Semi-parametric nonlinear mixed-effects (SNM) models extend current statistical nonlinear models for grouped data in two directions: adding flexibility to a nonlinear mixed-effects model by allowing the mean function to depend on some non-parametric functions, and providing ways to model the covariance structure and covariate effects in an SNR model. An SNM model assumes that

(42)

(43)

Let ![](), ![](), ![](), ![](), ![](), ![]() and ![]().

The SNM model (42) and (43) can then be written in matrix form

(44)
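As a concrete picture of the stacked notation, the Python sketch below simulates grouped data from a toy SNM model and stores the observations, the cluster parameters and the random effects cluster by cluster. The mean function `phi_i[0] + exp(phi_i[1]) * f(x)`, the identity design matrices (so each cluster's parameters are the fixed effects plus its random effects), the independent Gaussian errors and the name `simulate_snm` are all assumptions made for illustration, not part of ASSIST.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def simulate_snm(m: int, x: Sequence[float], beta: Sequence[float],
                 re_sd: Sequence[float], sigma: float,
                 f: Callable[[float], float],
                 seed: int = 0) -> Tuple[List[float], List[float], List[float]]:
    """Simulate a toy SNM model (hypothetical example, not from ASSIST).

    Assumed structure:
      phi_i  = beta + b_i                       (identity design matrices)
      y_ij   = phi_i[0] + exp(phi_i[1]) * f(x_j) + eps_ij
      b_i[k] ~ N(0, re_sd[k]^2), eps_ij ~ N(0, sigma^2), all independent
    Returns the stacked vectors y, phi and b, ordered cluster by cluster.
    """
    rng = random.Random(seed)
    y: List[float] = []
    phi: List[float] = []
    b: List[float] = []
    for _ in range(m):
        b_i = [rng.gauss(0.0, s) for s in re_sd]
        phi_i = [bk + bik for bk, bik in zip(beta, b_i)]
        for x_j in x:
            eta = phi_i[0] + math.exp(phi_i[1]) * f(x_j)
            y.append(eta + rng.gauss(0.0, sigma))
        phi.extend(phi_i)
        b.extend(b_i)
    return y, phi, b


x = [j / 9 for j in range(10)]
y, phi, b = simulate_snm(m=5, x=x, beta=[1.0, 0.0], re_sd=[0.3, 0.1],
                         sigma=0.05, f=lambda t: math.sin(2 * math.pi * t))
print(len(y), len(phi), len(b))  # 50 10 10
```

Stacking cluster by cluster keeps each cluster's observations contiguous, which is what makes the block-diagonal covariance matrices of the matrix form natural.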

For fixed ![]() and ![](), we estimate ![](), ![](), ![](), ![]() as the minimizers of the following double penalized log-likelihood

Since ![]() may interact with ![]() and ![]() in a complicated way, we must use iterative procedures to solve (![]()) and (![]()). We proposed two procedures in Ke and Wang (2001) for the case when ![]() is linear in ![](). It is not difficult to extend these procedures to the general case. In the following, we describe the extension of Procedure 1 of Ke and Wang (2001).
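The interaction between the non-parametric function and the effects is easy to see in a deliberately simple special case. The sketch below evaluates a double penalized criterion for a hypothetical random-intercept model `y_ij = b_i + f(t_j) + e_ij` on a common grid, assuming independent errors, a scalar variance ratio `d` for the random intercepts, and a sum of squared second differences in place of the spline roughness penalty. The function names are illustrative only.

```python
from typing import Sequence


def roughness(f: Sequence[float]) -> float:
    """Sum of squared second differences: a discrete stand-in for a spline penalty."""
    return sum((f[j] - 2.0 * f[j + 1] + f[j + 2]) ** 2 for j in range(len(f) - 2))


def double_penalized_criterion(y: Sequence[Sequence[float]], b: Sequence[float],
                               f: Sequence[float], d: float, lam: float) -> float:
    """RSS + sum(b_i^2) / d + lam * roughness(f) for the toy random-intercept model.

    y[i][j] is observation j of cluster i, b[i] the random intercept of
    cluster i and f[j] the function value at grid point j (all hypothetical).
    """
    rss = sum((y_ij - b_i - f_j) ** 2
              for row, b_i in zip(y, b)
              for y_ij, f_j in zip(row, f))
    return rss + sum(b_i ** 2 for b_i in b) / d + lam * roughness(f)


y = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0]]
# Identical fitted values; the common level sits in b first, then mostly in f.
print(double_penalized_criterion(y, [0.0, 1.0], [1.0, 2.0, 3.0], d=0.5, lam=1.0))  # 2.0
print(double_penalized_criterion(y, [-0.5, 0.5], [1.5, 2.5, 3.5], d=0.5, lam=1.0))  # 1.0
```

Both calls reproduce the data exactly, yet the criterion prefers the second split of the common level between the function and the random intercepts. The split is decided by the penalties, which is why neither component can be estimated without the other.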

Procedure 1: estimate ![](), ![](), ![](), ![]() and ![]() iteratively using the following three steps:

(a) given the current estimates of ![](), ![]() and ![](), update ![]() by solving (![]());

(b) given the current estimates of ![]() and ![](), update ![]() and ![]() by solving (![]());

(c) given the current estimates of ![](), ![]() and ![](), update ![]() and ![]() by solving (![]()).
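The control flow of the three steps can be sketched independently of any particular model. In the Python skeleton below, `procedure_1`, the state dictionary and the step callables are hypothetical names. The sketch assumes, consistently with the correspondence to the pseudo-data and LME steps of Lindstrom and Bates (1990) described in this section, that step (b) updates the fixed and random effects and step (c) the variance parameters; the `n_inner` argument covers the variant that alternates (a) and (b) several times before (c).

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Step = Callable[[State], State]


def procedure_1(state: State, step_a: Step, step_b: Step, step_c: Step,
                n_outer: int = 1, n_inner: int = 1) -> State:
    """Run the three-step cycle n_outer times (control-flow sketch only).

    step_a: update the non-parametric function(s) given the other estimates
    step_b: update fixed and random effects given the current function(s)
    step_c: update the variance/covariance parameters
    n_inner: number of (a)-(b) alternations before each call to step_c
    """
    for _ in range(n_outer):
        for _ in range(n_inner):
            state = step_a(state)
            state = step_b(state)
        state = step_c(state)
    return state


# Usage: record the order in which the (dummy) steps are called.
trace: List[str] = []


def recorder(name: str) -> Step:
    def step(state: State) -> State:
        trace.append(name)
        return state
    return step


procedure_1({}, recorder("a"), recorder("b"), recorder("c"), n_outer=2, n_inner=2)
print("".join(trace))  # ababcababc
```

Passing the steps as functions keeps the loop structure separate from the fitting code, so a smoother and a mixed-model fitter can be plugged in without changing the iteration logic.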

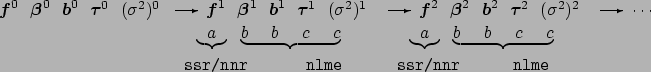

Note that step (b) corresponds to the pseudo-data step and step (c) corresponds to part of the LME step in Lindstrom and Bates (1990). Thus the nlme function can be used to carry out steps (b) and (c).

In step (a), (![]()) reduces to (![]()) after certain transformations. Then, depending on whether ![]() is linear in ![](), the ssr function (linear case) or the nnr function (nonlinear case) can be used to update ![](). At each iteration, we choose the smoothing parameters using a data-adaptive criterion such as GCV, GML or UBR.
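For the linear case, the role of the smoothing-parameter criterion can be illustrated with a discrete penalized smoother. The sketch below assumes the transformed problem is simply "pseudo-responses = function values + noise" on a grid, uses squared second differences instead of a spline penalty, and scores each candidate `lam` by the standard GCV criterion `n * RSS / (n - tr S)^2`, where `S = (I + lam K)^{-1}` is the smoother matrix. The helpers `penalty_matrix`, `solve` and `gcv_score` are hypothetical; this is not how ssr computes GCV.

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def penalty_matrix(n: int) -> Matrix:
    """K = D^T D, where D maps a length-n vector to its n-2 second differences."""
    k = [[0.0] * n for _ in range(n)]
    for r in range(n - 2):
        coef = {r: 1.0, r + 1: -2.0, r + 2: 1.0}
        for i, ci in coef.items():
            for j, cj in coef.items():
                k[i][j] += ci * cj
    return k


def solve(a: Matrix, rhs: Sequence[float]) -> List[float]:
    """Gaussian elimination without pivoting (adequate for the SPD systems here)."""
    n = len(rhs)
    a = [row[:] for row in a]
    x = list(rhs)
    for c in range(n):
        for r in range(c + 1, n):
            factor = a[r][c] / a[c][c]
            if factor != 0.0:
                for j in range(c, n):
                    a[r][j] -= factor * a[c][j]
                x[r] -= factor * x[c]
    for r in range(n - 1, -1, -1):
        x[r] = (x[r] - sum(a[r][j] * x[j] for j in range(r + 1, n))) / a[r][r]
    return x


def gcv_score(z: Sequence[float], lam: float) -> Tuple[float, List[float]]:
    """Return (GCV(lam), fitted values) for the smoother S = (I + lam K)^{-1}."""
    n = len(z)
    k = penalty_matrix(n)
    a = [[(1.0 if i == j else 0.0) + lam * k[i][j] for j in range(n)] for i in range(n)]
    fit = solve(a, z)
    # tr S: entry j of S applied to the j-th unit vector is the j-th diagonal entry
    trace_s = sum(solve(a, [1.0 if i == j else 0.0 for i in range(n)])[j] for j in range(n))
    rss = sum((zi - fi) ** 2 for zi, fi in zip(z, fit))
    return n * rss / (n - trace_s) ** 2, fit


# Usage: noisy values of a smooth curve on 20 grid points, scored over a log grid.
rng = random.Random(1)
n = 20
z = [math.sin(2 * math.pi * j / (n - 1)) + rng.gauss(0.0, 0.2) for j in range(n)]
grid = [10.0 ** p for p in range(-2, 4)]
scores = {lam: gcv_score(z, lam)[0] for lam in grid}
best_lam = min(scores, key=scores.get)
print(best_lam, round(scores[best_lam], 4))
```

GCV balances the residual sum of squares against the effective degrees of freedom `tr S`; straight lines lie in the null space of the penalty, so they pass through the smoother unchanged for any `lam`.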

To minimize (![]()), we need to alternate between steps (a) and (b) until convergence. Our simulations indicate that one iteration is usually enough. Figure ![]() shows the flow chart of Procedure 1 when (a) and (b) are alternated only once. Step (a) can be solved by ssr or nnr. It is easy to see that steps (b) and (c) are equivalent to fitting an NLMM with ![]() fixed at the current estimate, using the same methods proposed in Lindstrom and Bates (1990). Therefore these two steps can be combined and solved by the S function nlme (Pinheiro and Bates, 2000).
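With the variance parameters held fixed, alternating (a) and (b) is block coordinate descent on the double penalized criterion, so the criterion cannot increase from one step to the next. The sketch below checks this on a hypothetical random-intercept model `y_ij = b_i + f(t_j) + e_ij`, assuming independent errors, a fixed variance ratio `d` and a second-difference penalty; `fit_ab`, `step_a`, `step_b` and `criterion` are illustrative names, and the closed-form updates are exact minimizers only for this toy model.

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def penalty_matrix(n: int) -> Matrix:
    """K = D^T D for the (n-2) x n second-difference matrix D."""
    k = [[0.0] * n for _ in range(n)]
    for r in range(n - 2):
        coef = {r: 1.0, r + 1: -2.0, r + 2: 1.0}
        for i, ci in coef.items():
            for j, cj in coef.items():
                k[i][j] += ci * cj
    return k


def solve(a: Matrix, rhs: Sequence[float]) -> List[float]:
    """Gaussian elimination without pivoting (adequate for SPD systems)."""
    n = len(rhs)
    a = [row[:] for row in a]
    x = list(rhs)
    for c in range(n):
        for r in range(c + 1, n):
            factor = a[r][c] / a[c][c]
            if factor != 0.0:
                for j in range(c, n):
                    a[r][j] -= factor * a[c][j]
                x[r] -= factor * x[c]
    for r in range(n - 1, -1, -1):
        x[r] = (x[r] - sum(a[r][j] * x[j] for j in range(r + 1, n))) / a[r][r]
    return x


def criterion(y: Matrix, b: Sequence[float], f: Sequence[float],
              d: float, lam: float, k: Matrix) -> float:
    """RSS + sum(b_i^2)/d + lam * f^T K f for the toy model."""
    n = len(f)
    rss = sum((yij - bi - fj) ** 2 for row, bi in zip(y, b) for yij, fj in zip(row, f))
    pen = sum(f[i] * k[i][j] * f[j] for i in range(n) for j in range(n))
    return rss + sum(bi ** 2 for bi in b) / d + lam * pen


def step_a(y: Matrix, b: Sequence[float], lam: float, k: Matrix) -> List[float]:
    """Exact minimizer over f given b: (m I + lam K) f = column sums of y_ij - b_i."""
    m, n = len(y), len(y[0])
    a = [[(float(m) if i == j else 0.0) + lam * k[i][j] for j in range(n)] for i in range(n)]
    return solve(a, [sum(y[i][j] - b[i] for i in range(m)) for j in range(n)])


def step_b(y: Matrix, f: Sequence[float], d: float) -> List[float]:
    """Exact minimizer over b given f: b_i = sum_j (y_ij - f_j) / (n + 1/d)."""
    n = len(f)
    return [sum(yij - fj for yij, fj in zip(row, f)) / (n + 1.0 / d) for row in y]


def fit_ab(y: Matrix, d: float, lam: float, tol: float = 1e-10,
           max_iter: int = 500) -> Tuple[List[float], List[float], List[float]]:
    """Alternate (a) and (b) with d fixed; record the criterion after every step."""
    m, n = len(y), len(y[0])
    k = penalty_matrix(n)
    b, f = [0.0] * m, [0.0] * n
    history = [criterion(y, b, f, d, lam, k)]
    for _ in range(max_iter):
        f = step_a(y, b, lam, k)
        history.append(criterion(y, b, f, d, lam, k))
        b = step_b(y, f, d)
        history.append(criterion(y, b, f, d, lam, k))
        if history[-3] - history[-1] <= tol * history[-1]:  # negligible decrease
            break
    return f, b, history


rng = random.Random(7)
m, n = 8, 15
t = [j / (n - 1) for j in range(n)]
true_b = [rng.gauss(0.0, 0.5) for _ in range(m)]
y = [[bi + math.sin(2 * math.pi * tj) + rng.gauss(0.0, 0.1) for tj in t] for bi in true_b]
f_hat, b_hat, history = fit_ab(y, d=1.0, lam=1.0)
print(len(history), round(history[0], 3), round(history[-1], 3))
```

Because each update is the exact minimizer over its block, `history` is non-increasing up to rounding error. When the random-intercept variance is large relative to the error variance (large `d`), the level shared by the function and the random intercepts is only weakly identified and this alternation can converge slowly.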

Figure ![]() suggests an obvious iterative algorithm that calls nnr and nlme alternately. Other options are easy to use in our implementation; for example, we may alternate steps (a) and (b) several times before proceeding to step (c). In our studies these approaches usually gave the same results. For details about the estimation methods and procedures, see Ke and Wang (2001).
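The alternating algorithm can be mimicked end to end on a hypothetical toy model, `y_ij = b_i + f(t_j) + e_ij` with random intercepts `b_i ~ N(0, sb2)` and independent errors `e_ij ~ N(0, s2)`. In this Python sketch a discrete penalized smoother plays the role that ssr or nnr plays in step (a), and closed-form random-intercept updates stand in for nlme in steps (b) and (c). The EM-style variance updates (which treat the current function estimate as known), the fixed smoothing parameter `lam`, the second-difference penalty and all names are assumptions; `n_inner` alternates (a) and (b) several times before (c).

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def penalty_matrix(n: int) -> Matrix:
    """K = D^T D for the (n-2) x n second-difference matrix D."""
    k = [[0.0] * n for _ in range(n)]
    for r in range(n - 2):
        coef = {r: 1.0, r + 1: -2.0, r + 2: 1.0}
        for i, ci in coef.items():
            for j, cj in coef.items():
                k[i][j] += ci * cj
    return k


def solve(a: Matrix, rhs: Sequence[float]) -> List[float]:
    """Gaussian elimination without pivoting (adequate for SPD systems)."""
    n = len(rhs)
    a = [row[:] for row in a]
    x = list(rhs)
    for c in range(n):
        for r in range(c + 1, n):
            factor = a[r][c] / a[c][c]
            if factor != 0.0:
                for j in range(c, n):
                    a[r][j] -= factor * a[c][j]
                x[r] -= factor * x[c]
    for r in range(n - 1, -1, -1):
        x[r] = (x[r] - sum(a[r][j] * x[j] for j in range(r + 1, n))) / a[r][r]
    return x


def procedure_1_toy(y: Matrix, lam: float, n_outer: int = 20,
                    n_inner: int = 1) -> Tuple[List[float], List[float], float, float]:
    """Hypothetical toy version of the three-step procedure."""
    m, n = len(y), len(y[0])
    k = penalty_matrix(n)
    a_mat = [[(float(m) if i == j else 0.0) + lam * k[i][j] for j in range(n)] for i in range(n)]
    f, b = [0.0] * n, [0.0] * m
    s2, sb2 = 1.0, 1.0  # starting error and random-intercept variances
    for _ in range(n_outer):
        for _ in range(n_inner):
            # (a) smooth the pseudo-responses y_ij - b_i (stand-in for ssr/nnr)
            f = solve(a_mat, [sum(y[i][j] - b[i] for i in range(m)) for j in range(n)])
            # (b) best linear unbiased predictors of b_i given f and the variances
            b = [sum(yij - fj for yij, fj in zip(row, f)) / (n + s2 / sb2) for row in y]
        # (c) EM-style variance updates, treating f as known
        v = 1.0 / (n / s2 + 1.0 / sb2)  # conditional variance of each b_i
        rss = sum((y[i][j] - b[i] - f[j]) ** 2 for i in range(m) for j in range(n))
        s2 = (rss + m * n * v) / (m * n)
        sb2 = sum(bi ** 2 for bi in b) / m + v
    return f, b, s2, sb2


rng = random.Random(3)
m, n = 15, 20
t = [j / (n - 1) for j in range(n)]
true_f = [math.sin(2 * math.pi * tj) for tj in t]
true_b = [rng.gauss(0.0, 0.5) for _ in range(m)]
y = [[bi + fj + rng.gauss(0.0, 0.1) for fj in true_f] for bi in true_b]
f_hat, b_hat, s2_hat, sb2_hat = procedure_1_toy(y, lam=1.0)
mse = sum((b_hat[i] + f_hat[j] - true_b[i] - true_f[j]) ** 2
          for i in range(m) for j in range(n)) / (m * n)
print(round(mse, 5), round(s2_hat, 4), round(sb2_hat, 3))
```

The comparison uses the fitted means `b_i + f(t_j)` because, in this toy model without a fixed intercept, the overall level can be shared between the function and the random intercepts.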


Approximate Bayesian confidence intervals can be constructed for ![]() (Ke and Wang, 2001).
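The idea behind Bayesian intervals can be shown for a simple discretized penalized smoother (squared second differences in place of a spline penalty). If the roughness penalty is read as a partially improper Gaussian prior on the function values, the posterior covariance of the fit is `sigma^2 (I + lam K)^{-1}`, giving pointwise intervals `fit ± 1.96 * sqrt(sigma^2 * S_jj)`, in the spirit of Wahba's intervals for smoothing splines. The sketch below assumes this signal-plus-noise setting and estimates `sigma^2` by `RSS / (n - tr S)`; it is not the SNM interval construction of Ke and Wang (2001), and `bayesian_bands` is a hypothetical name.

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def penalty_matrix(n: int) -> Matrix:
    """K = D^T D for the (n-2) x n second-difference matrix D."""
    k = [[0.0] * n for _ in range(n)]
    for r in range(n - 2):
        coef = {r: 1.0, r + 1: -2.0, r + 2: 1.0}
        for i, ci in coef.items():
            for j, cj in coef.items():
                k[i][j] += ci * cj
    return k


def solve(a: Matrix, rhs: Sequence[float]) -> List[float]:
    """Gaussian elimination without pivoting (adequate for SPD systems)."""
    n = len(rhs)
    a = [row[:] for row in a]
    x = list(rhs)
    for c in range(n):
        for r in range(c + 1, n):
            factor = a[r][c] / a[c][c]
            if factor != 0.0:
                for j in range(c, n):
                    a[r][j] -= factor * a[c][j]
                x[r] -= factor * x[c]
    for r in range(n - 1, -1, -1):
        x[r] = (x[r] - sum(a[r][j] * x[j] for j in range(r + 1, n))) / a[r][r]
    return x


def bayesian_bands(z: Sequence[float], lam: float,
                   z_crit: float = 1.96) -> Tuple[List[float], List[float], List[float]]:
    """Fitted values with pointwise posterior intervals for S = (I + lam K)^{-1}."""
    n = len(z)
    k = penalty_matrix(n)
    a = [[(1.0 if i == j else 0.0) + lam * k[i][j] for j in range(n)] for i in range(n)]
    fit = solve(a, z)
    # diagonal of S, one unit vector at a time
    s_diag = [solve(a, [1.0 if i == j else 0.0 for i in range(n)])[j] for j in range(n)]
    rss = sum((zi - fi) ** 2 for zi, fi in zip(z, fit))
    sigma2 = rss / (n - sum(s_diag))  # residual variance with tr S degrees of freedom
    half = [z_crit * math.sqrt(sigma2 * s) for s in s_diag]
    lower = [fi - h for fi, h in zip(fit, half)]
    upper = [fi + h for fi, h in zip(fit, half)]
    return fit, lower, upper


rng = random.Random(5)
n = 25
t = [j / (n - 1) for j in range(n)]
z = [math.sin(2 * math.pi * tj) + rng.gauss(0.0, 0.2) for tj in t]
fit, lower, upper = bayesian_bands(z, lam=10.0)
print([round(v, 2) for v in (lower[12], fit[12], upper[12])])
```

Like Wahba's intervals, these bands are meant to have approximately the nominal coverage on average across the grid points rather than at every individual point.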